Within the IT industry, when considering data-at-rest (DAR) encryption, you may have noticed recently that security experts seem a little divided on how to leverage and apply this technology. Many experts have stated that only sensitive data should be encrypted. Others seem to be preaching a “new” gospel that all data at rest should be encrypted. Why this philosophical split and what, if anything, has taken us down this path? Does a right answer exist, and are there advantages to one strategy over another?

I will state upfront as an initial thesis that, historically, obstacles around encryption have driven a lot of its present-day conception and (lack of) acceptance, adoption, and use within enterprises. If we take a quick look back in time and also sample some uses of encryption today, I think it’s pretty easy to demonstrate that without these past obstacles, the intermediate and precautionary concept of “encrypt only sensitive data” would never have come into play and enterprises today would be encrypting all data-at-rest as standard operating procedure.

Historical Obstacles to Pervasive Encryption

Pervasive DAR encryption has always held at least conceptual appeal. As companies have faced escalating threats to their data in recent years, the idea of restricting data and information to only those parties with “need to know” status has quickly become an attractive option. But that appeal could never be realized in the past because of the sizable obstacles surrounding DAR encryption. Few realize that the dynamics around enterprise DAR encryption have changed such that these ambitions can now be very readily realized.

But before jumping too far ahead, let’s quickly review some of the main obstacles that have driven most companies to apprehension around DAR encryption:

Overhead of Applying DAR Encryption through Initial Transformation: Data isn’t normally created in an encrypted state. It has to be placed in an encrypted state or go through what we data security experts call “a transformation process.” This process takes time, and it has classically required at least as much additional space on-disk to encrypt as the non-encrypted data occupies. There are exceptions to this, such as when application encryption is used and data is created in an encrypted state. But by and large, most enterprises aren’t nearly at this maturity level in terms of application encryption, and therefore encryption has classically required an initial transformation and the additional space to accomplish it.

Operational Performance Overhead: Encrypting data requires significant computational power to apply the intended cryptographic algorithms to the data to transform it from a decrypted state to an encrypted state and back again. The computational overhead in the first obstacle (above) is paid once, upfront. Operational performance overhead is ongoing; every time data needs to be used, it has to be decrypted on read and then encrypted again after changes are made to that data and it’s written back to disk. This operational overhead has in the past been sized as high as 50%, making encryption of everything a prohibitive choice for anything but the most sensitive of data.

Ease of Use: Aside from customized encryption solutions written into specific applications, it has been very difficult to encrypt all data wholesale in a standard, dynamic, and operational fashion while still providing consistent, seamless, and transparent access to data across its various forms of access – CLI, GUI, API calls, file and block I/O, etc.

Lack of Key Management: One of the most necessary and lacking components that has stripped DAR encryption of ease of use has been key management. Encryption itself has actually always been easy to accomplish. Methods for encryption have existed since even before electronic computing. Take for example the German Enigma machine of WWII, which was based on electromechanical encryption. Encrypting data itself has never really been difficult to do per se and per instance of data. Key management, run-time application of the proper keys, and securing and rotating those keys have classically been a big nightmare and one of the main impediments to making encryption easy to use and therefore pervasively applied.

(Incidentally, lazy key management and key rotation ultimately caught up with the Germans and were key to finally breaking their so-called “unbreakable” Enigma encryption, which greatly contributed to us winning WWII. Just think: the whole concept of key management could be significant enough to credit with basic freedom and present-day governments, geographic boundaries, and language distribution! Who knew?!)
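The key-management burden described above can be illustrated with a minimal bookkeeping sketch. It is not any vendor's API: `KeyRing`, `stale_objects`, and the file names are hypothetical, and no actual cipher is involved. It only shows why manual rotation is painful: every rotation leaves behind objects still tied to an older key version, and something has to track them and re-encrypt them.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class KeyRing:
    """Tracks key versions only; performs no encryption (hypothetical sketch)."""
    versions: dict[int, bytes] = field(default_factory=dict)
    current: int = 0

    def rotate(self) -> int:
        """Generate a new random 256-bit key and make it the current version."""
        self.current += 1
        self.versions[self.current] = secrets.token_bytes(32)
        return self.current

    def key_for(self, version: int) -> bytes:
        """Old versions must stay retrievable until nothing depends on them."""
        return self.versions[version]


def stale_objects(catalog: dict[str, int], ring: KeyRing) -> list[str]:
    """Names of objects still protected by a key older than the current one."""
    return sorted(name for name, v in catalog.items() if v != ring.current)


ring = KeyRing()
v1 = ring.rotate()
# The catalog records which key version protects each (made-up) object.
catalog = {"hr.db": v1, "sales.csv": v1}

v2 = ring.rotate()
catalog["sales.csv"] = v2  # pretend this object was re-encrypted under v2

print(stale_objects(catalog, ring))  # ['hr.db']
```

Multiply the catalog by every file, volume, and database in an enterprise and the appeal of automated rotation and versioning becomes clear.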

Obstacles Lead to Abandonment of Pervasive Encryption

Pervasive encryption then, for all of the reasons above and as attractive as it has been at least conceptually, has largely been abandoned over the years. It’s been too expensive in time, in its computational requirements, in its space requirements, in its operational efficiency, and in its management and overall ease of use. When we consider the historical view of encryption, we find it’s for these reasons and really these reasons alone that experts have touted that only sensitive data should be encrypted.
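To put rough numbers on those costs, here is a back-of-the-envelope sketch. Only the "equal amount of space" and "as high as 50%" figures come from the obstacles listed earlier; the data size and throughput values are made-up assumptions, and `LegacyEncryptionCost` is a hypothetical name rather than part of any product.

```python
from dataclasses import dataclass


@dataclass
class LegacyEncryptionCost:
    """Rough cost model for legacy DAR encryption (all inputs are illustrative)."""
    data_tb: float             # size of the plaintext data set, in terabytes
    transform_mb_per_s: float  # assumed sustained rate of the initial transformation
    baseline_mb_per_s: float   # assumed unencrypted read/write throughput
    overhead: float            # fraction of throughput lost to encryption (0.5 = 50%)

    def transform_hours(self) -> float:
        """One-time cost: hours needed to encrypt the existing data."""
        megabytes = self.data_tb * 1_000_000
        return megabytes / self.transform_mb_per_s / 3600

    def peak_disk_tb(self) -> float:
        """Plaintext plus an equally sized encrypted copy during transformation."""
        return self.data_tb * 2

    def effective_mb_per_s(self) -> float:
        """Ongoing cost: throughput left after the encryption overhead."""
        return self.baseline_mb_per_s * (1 - self.overhead)


cost = LegacyEncryptionCost(
    data_tb=10.0, transform_mb_per_s=200.0, baseline_mb_per_s=400.0, overhead=0.5
)
print(round(cost.transform_hours(), 1))  # 13.9
print(cost.peak_disk_tb())               # 20.0
print(cost.effective_mb_per_s())         # 200.0
```

Under these assumed figures, a 10 TB data set means more than half a day of transformation, double the disk footprint while it runs, and half the throughput afterward, which is the kind of math that pushed enterprises toward encrypting only their most sensitive data.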

And if you don’t believe me that underneath, we’re all de facto “encrypt everything” advocates, then consider instances in which encryption and key management have been made transparently useful in the industry.

When storage vendors made full disk encryption an option, especially when aided by hardware, data-at-rest encryption in this form was readily and rapidly adopted industry-wide, usually for all data. The only consideration has been additional licensing and fees, but operationally, full disk encryption[1] (essentially a form of “encrypt everything”) has never been viewed as a questionable practice in terms of pervasiveness. The same goes for full end-point encryption or free full disk encryption offered by many Unix and Linux variants.

There are other examples that I won’t discuss here, such as cloud and network/over-the-wire encryption (also called “data in motion”), that support the same point: underneath, we’d all like to be encrypting everything, and “encrypt only sensitive data” has historically been a response (and an understandable one) to the obstacles surrounding DAR encryption.

The Diminishing Returns on Data Classification

As data has proliferated in the enterprise at astounding speed in the last decade, data classification has strengthened as the primary means to measure enterprise worth and determine risk to the organization when it comes to data.

However, one of the strongest, growing, and lasting rationales for performing data classification has increasingly been driven by the aforementioned obstacles around DAR encryption: only data classified as highly sensitive has provided enterprises with enough incentive to undergo the pain of encrypting it. Without data classification, you don’t know where that sensitive data sits, what interacts with it, or what that data represents to your organization in terms of worth and risk.

But what if all data were encrypted and protected by strong access controls such that only those persons with a business need to know had access to intended data and only intended data? What if privileged users could be blinded from enterprise data with access only to the metadata (i.e. file names, folder names, dates, sizes, etc.)? How would that change our need for data to be classified? And have we swapped one operational nightmare for another through the exercise of data classification? Would we be able to measure risk differently if we could maintain, model, and provide access to data in a completely new and different way?
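The access model these questions point to can be sketched as a simple policy decision. This is an illustration only, not how any particular product implements it; `Access`, `User`, `decide`, and the data set names are hypothetical. The idea is that a business need to know unlocks cleartext, while administrative privilege alone unlocks nothing beyond metadata.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Access(Enum):
    NONE = "none"
    METADATA_ONLY = "metadata_only"  # file names, sizes, dates; contents stay encrypted
    CLEARTEXT = "cleartext"


@dataclass(frozen=True)
class User:
    name: str
    is_admin: bool = False
    need_to_know: frozenset[str] = frozenset()  # data sets this user may read


def decide(user: User, data_set: str) -> Access:
    """Need to know grants cleartext; privilege alone grants metadata only."""
    if data_set in user.need_to_know:
        return Access.CLEARTEXT
    if user.is_admin:
        return Access.METADATA_ONLY
    return Access.NONE


root = User("root", is_admin=True)
analyst = User("dana", need_to_know=frozenset({"payroll"}))

print(decide(root, "payroll"))     # Access.METADATA_ONLY
print(decide(analyst, "payroll"))  # Access.CLEARTEXT
print(decide(analyst, "legal"))    # Access.NONE
```

Note that the decision never asks how sensitive a data set is. Under this model every data set is protected the same way, and only the need-to-know list varies.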

For those who state that enterprises should encrypt only sensitive data, this means companies have to undergo a different kind of expense and maintenance overhead through the exercise of data classification.

Let’s consider the painful path of data classification:

What constitutes “sensitive?” Not everyone agrees on what “sensitive” means, so organizations have to spend some time and energy defining what “sensitive” means for them. Compliance standards can help with this exercise only so much. Line of business owners, application owners, technology leaders, and the C-Suite will often still have to meet and come to some consensus on what “sensitive” means for their organization. This takes time. A lot of time.

Then organizations have to begin classifying their data which often leads to a data discovery exercise. A lot of organizations don’t have an accurate picture of where all of their data resides. Data discovery and applying classification again take time and often the amount of data that needs to be sifted through and classified means an additional spend around tooling and resources to help accomplish this.

Once all data has been classified, it’s all but worthless if data can’t maintain classification. A static report on where data sits and how it’s classified is worthless within days (if not hours?!) of producing it; someone in your organization taking and placing sensitive data in an area that represents great risk to the organization can take place and does take place all the time – hourly.

So if organizations are going to invest in data classification, they also have to invest in maintaining and remediating situations in which sensitive data ends up in places where it can be leaked and cause damage to the organization. This usually requires tooling and resourcing and that resourcing usually places additional strain against already over-allocated security resources.

And data classification isn’t just about classifying and discovery. Even what organizations have defined as sensitive will change, often very rapidly in today’s business climate. Data classification overall then is time intensive and costly to produce, execute and maintain because it’s an exercise aimed at a rapidly moving and changing target.

Finally, let’s be real here. If recent breaches have taught us anything, they’ve taught us this: Enterprise data has changed state. Data has reached a zenith in the sense that, realistically, all data is now sensitive and valuable. It’s simply a matter of marrying the right data to the exact right economy and it instantly becomes gold to actors outside of your organization.

Encryption Unshackled

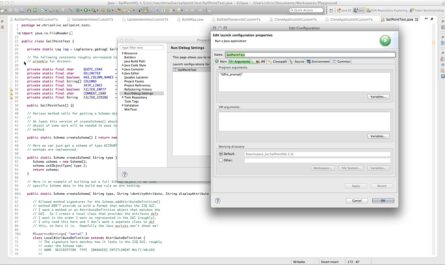

In case you’ve missed it, enterprise encryption solutions have undergone a tremendous renaissance in the last two to three years. Almost all of the aforementioned obstacles have been removed and solved by numerous vendors, propelled by hardware advances such as Intel’s AES-NI instruction set and by HSM vendors (including our own industry-leading Thales eSecurity HSMs).

And I’m very happy to state that at Vormetric (a Thales company), we’ve solved all of the obstacles I’ve mentioned, including eliminating the need for downtime during the initial transformation and providing real-time, fully automated key rotation and versioning! We can transform and encrypt your data in place, with zero downtime, transparently behind-the-scenes. No other data security vendor can come close to claiming that capability! (This unbelievable, new, patented technology is called Live Data Transformation.)

So the need to go through the complex, costly, and time-consuming exercise of data classification has mostly been removed. By encrypting everything, we no longer need to worry about:

- What data is sensitive and where it lives. And again, at this point, based on information sold on the dark internet after the breaches of the last 2-3 years, we’re seeing that all data is sensitive in some way anyway.

- How that data will move around in the organization. As I mentioned above, when you classify data, you either end up with a stale report that describes where data was “only moments ago,” and you lose your investment in the classification exercise within days of producing it, or you have to spend additional dollars on tooling and possibly even full-time resourcing to maintain and remediate when sensitive data moves around in the organization. The hidden cost and real burden of doing data classification is this ongoing maintenance and remediation of data. (And so after we actually find the sensitive data we’re just going to “categorize it,” “watch it” and “move it around” instead of actually protecting it?! That strategy, if we can call that a strategy, doesn’t make any sense when we sit down and think about it.)

- Satisfying and maintaining compliance requirements that specify DAR encryption should be utilized as data finds new residence. Of course, you still may have other requirements to meet within various standards under which your organization may need to line up, but for those compliance requirements that specify DAR encryption, by encrypting everything, you’re always in compliance in that regard. When data moves around in the organization, even between on-prem and in the cloud, you’re always in compliance with respect to DAR encryption.

- Reporting data that is leaked when it’s leaked. Experts are touting it’s not a matter of “if” but when you’ll be breached. Hard to believe, I know, but I have to admit and agree based on experience and numerous factors I and other experts are seeing in the industry, that’s probably an axiomatic statement. And most compliance standards maintain that if your data is encrypted, publicly reporting data that was leaked is not required. Think of the savings to your brand and reputation (which alone can amount to millions of dollars) when you have the security of knowing that all your data is encrypted and leaked encrypted data does not have to be publicly reported! Who cares if you happen to “know” that the data was “sensitive data” and not encrypted, but merely “correctly classified?” The data is already gone, in the clear, and now it has to be reported and the damage done. But not if all your data is encrypted. (This is an example of the difference between perimeter-based and compliance-based security versus data-centric security.)

If you’ve invested the time, money, and energy in data classification, or have been considering it, all of these are considerations you can begin ignoring simply by encrypting all of your data. Frankly, many organizations have never invested the time to classify all their data due to the cost in time, money, and resources necessary to carry it out and then maintain it.[2]

Encrypting all data at rest resolves almost all of the data sensitivity issues data classification attempts to address, especially for new data being generated. (If you don’t know where all your data sits, you still have to undergo the discovery exercise, but encrypting and protecting that data as you find it instead of merely “categorizing” it, eliminates a lot of concerns, risks and compliance issues after finding it.)

Conclusion: Simply Encrypt Everything

In conclusion, data is simply proliferating at too tremendous a rate, onto and into too many surfaces within the enterprise, for keeping up with it and merely categorizing it to be a seamless, easy, and worthwhile exercise. Data is not only proliferating on-prem, in the cloud, and on endpoints – it’s proliferating within multiple data centers in most organizations and in many clouds and many types of end-points (i.e. employee, partner, vendor, and agency endpoints) as most organizations seek to address zero downtime and business continuity concerns by adopting and developing heterogeneous cloud strategies to drive operational and to-scale efficiencies.

Meanwhile, enterprise DAR encryption has reached an operational robustness and maturity level that allows many organizations to dispense with the time-consuming, costly, and ongoing need for data classification and “protecting only sensitive data.” Recent breaches and data leaks have all but proven that all data now represents value to the organization and is therefore sensitive. It’s simply a matter of marrying your data, once stolen, to the right economy in order to extract that value and bring damage and harm to your organization – anything from names to email addresses to even sexual orientation can and will be used against your organization as we’ve seen over the last 2-3 years.

By encrypting everything, these concerns around data sensitivity, where data lives and the classification exercise begin to evaporate. Taking an encrypt everything approach releases organizations to better and more quickly address expansion needs and develop and adopt efficient and cost-effective operational and scaling strategies while simultaneously and immediately addressing risk rather than waiting for a catchup exercise that paints a still-ambiguous picture of risk within the organization.

At the micro-level, in the case of transparent encryption with strong access controls that blind privileged users to actual information, you can actually begin modeling your data in a way never before possible that truly addresses risk: into secured silos or citadels of information, something out-of-the-box (OOTB) controls can never give your organization the power to accomplish. This is how data should ideally have been modeled and rolled out all along, all but eliminating insider abuse and theft and eliminating breach threats based on the elevation of access. (More on this in an upcoming whitepaper!)

Questions? Concerns? Disagree? Let me know what you think in the comments below or, as always, feel free to engage with me on Twitter @ChrisEOlive.

________________________

Footnotes:

[1] To understand why full disk encryption (FDE) doesn’t address any risk other than physical theft or loss of device, read FDE: What Is It Good For?, FDE: The Right Tool for the Wrong Job and FDE is Physical Security not IT Security.

[2] Are there other benefits to data classification? Strictly and theoretically speaking, yes. But I believe the returns on that costly exercise are diminishing rapidly as (1) encryption can now be quickly and transparently applied to all data, (2) storage costs plummet and storage becomes ubiquitous, further abstracted, and commoditized, and (3) cloud computing continues to displace on-prem data center (DC) operations and the need for organizations to seek DC operational and storage efficiencies. In the face of these industry transformations, data sensitivity against the backdrop of the past obstacles around encryption has remained almost the sole reason for the continued existence of data classification. And this remaining reason has now all but been removed based on transparent encryption and almost all enterprise data having become categorically sensitive.